2000StreetRod

Moderator Emeritus

- Joined: May 26, 2009
- Messages: 10,562
- Reaction score: 375
- City, State: Greenville, SC
- Year, Model & Trim Level: 00 Sport FI, 03 Ltd V8

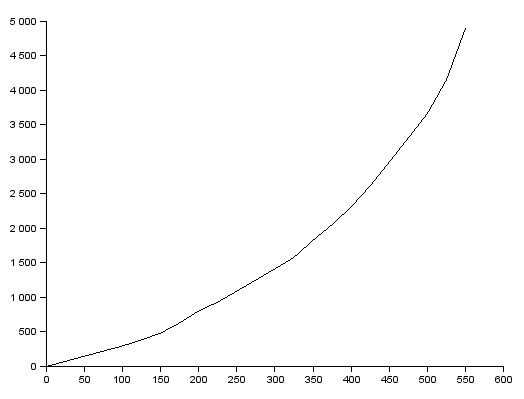

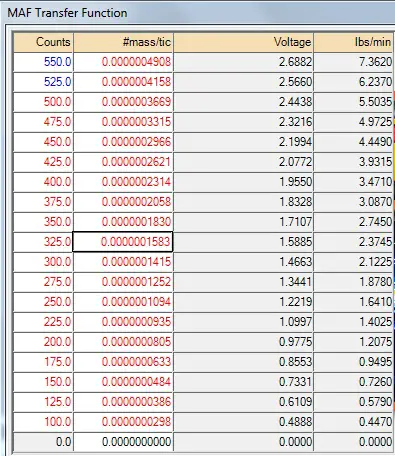

MTF modifications (continued)

I made some smoothing adjustments for 250, 275, 300, 450 and 475 MAF AD counts.

My goal is to get the actual AFR within a few percent of the commanded AFR. Except for a brief period after engine start, the PCM will normally be in closed loop, and the STFTs will correct for any errors when the MAF AD count is below 500. Accuracy matters more during WOT, when the PCM is in open loop and nothing corrects the error.
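As a rough illustration of the "within a few percent" goal, here is a minimal sketch of how the error between actual and commanded could be computed from logged values. The function names and the 3% default tolerance are my own assumptions for illustration, not anything from the tuning software. The same ratio works for AFR or lambda.

```python
def lambda_error_pct(actual: float, commanded: float) -> float:
    """Percent error of actual vs commanded lambda (or AFR).

    Positive means the engine is running leaner than commanded.
    """
    return (actual - commanded) / commanded * 100.0


def within_tolerance(actual: float, commanded: float, tol_pct: float = 3.0) -> bool:
    """True if actual is within tol_pct percent of commanded.

    tol_pct = 3.0 is an assumed stand-in for "a few percent".
    """
    return abs(lambda_error_pct(actual, commanded)) <= tol_pct
```

For example, an actual lambda of 0.90 against a commanded 0.86 is a bit under 5% lean, which would fall outside a 3% tolerance.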

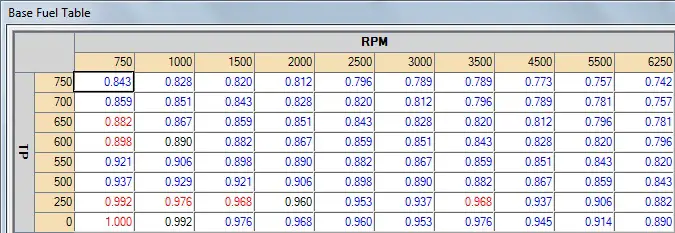

Tailoring the base fuel table for variation and resolution worked out well for low to medium airflow MTF tuning, so I modified it for medium to high airflow MTF tuning.

It will be difficult for me to test the high airflows. My Sport is Toreador Red, and the Dynomax VT muffler with a 3 in. diameter tailpipe is loud at WOT above 2500 rpm. It can probably be heard for miles. The other day I did a short WOT pull to 5,000 rpm in 3rd gear, and within two minutes I had a local sheriff tailgating me. I'll have to do full performance testing on the dyno, but I'd like to get the MTF reasonably close first.

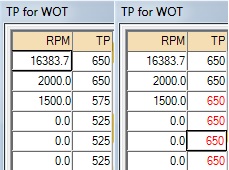

To avoid any WOT-related fuel complications, I altered the TP for WOT table.

For my Sport the TP for WOT is slightly above 750. I used 650 in this case to see if there are any obvious AFR changes when switching to/from WOT.

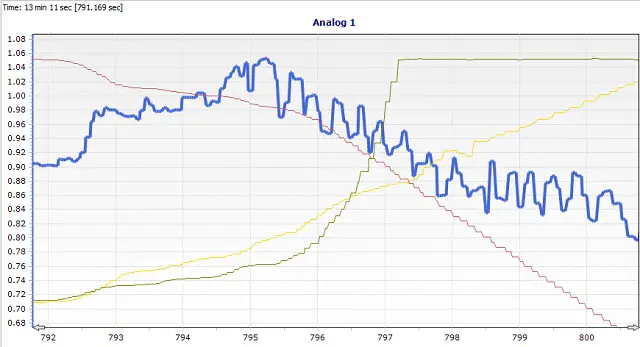

The Advantage III description for Aircharge WOT Multiplier states "This MUST be set to 1.9 on all cars, especially on newer models. This will basically limit the airflow that the PCM thinks is going into the engine and cause the engine to run very lean." My stock value is 1.02, but I set it to 1.00 to test the accuracy of the description. The plot below may be an example of what's being described. At tip-in (brown = TP), the actual lambda (blue) goes lean while the commanded lambda (purple) is richening. Yellow is engine speed.

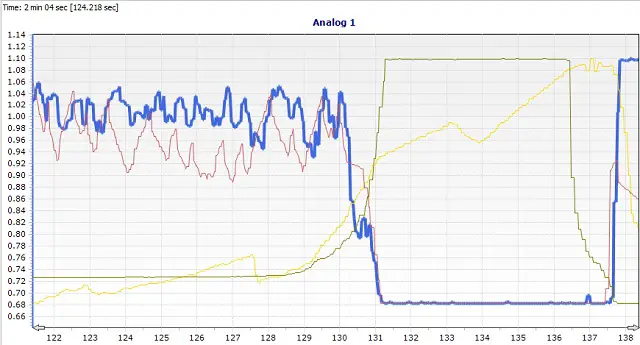
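The tip-in behavior described above can also be picked out of a datalog programmatically rather than by eye. This is only a sketch: the `Sample` layout, the `lean_at_tip_in` name, and both thresholds (`tp_rise`, `lean_margin`) are hypothetical choices of mine, and a real datalog export would need its own column mapping.

```python
from typing import List, NamedTuple


class Sample(NamedTuple):
    t: float                 # time in seconds
    tp: float                # throttle position (AD counts)
    actual_lambda: float     # wideband reading
    commanded_lambda: float  # PCM commanded value


def lean_at_tip_in(log: List[Sample],
                   tp_rise: float = 20.0,
                   lean_margin: float = 0.02) -> List[float]:
    """Return timestamps where TP is rising and actual is leaner than commanded.

    tp_rise: minimum TP increase between consecutive samples to count as tip-in
             (assumed value).
    lean_margin: how far actual lambda must exceed commanded to be flagged
                 (assumed value).
    """
    flagged = []
    for prev, cur in zip(log, log[1:]):
        tipping_in = (cur.tp - prev.tp) >= tp_rise
        lean = (cur.actual_lambda - cur.commanded_lambda) > lean_margin
        if tipping_in and lean:
            flagged.append(cur.t)
    return flagged
```

Running this over logs taken with different Aircharge WOT Multiplier settings would give a quick count of lean tip-in events for each, though the thresholds would need tuning to the sample rate of the logger.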

In the plot below, for a tune I'm paying to have generated, the Aircharge WOT Multiplier is set to 1.9 and the actual lambda does not go lean at tip-in.

However, in that tune the TP for WOT was set at 550, and TP didn't reach that value until after the 131-second mark. Since I didn't notice anything weird happening with WOT set to 650 in my tune, I'm going to lower it to 500 and set the Aircharge WOT Multiplier to 1.9 to see if that solves my lean condition at tip-in.

The Advantage III description for Correction for Max Aircharge states that "Setting this value to 1.99, like the WOT Aircharge Multiplier, will prevent load from ever being clipped, meaning that load will always be actual load." I changed the 0.98 stock value to 1.99 even though I haven't noticed any load clipping.

After reviewing another datalog with the revised MTF and Base Fuel table, I noticed that actual lambda is significantly higher (leaner) when accelerating than at stable engine load for any given MAF AD count. During normal operation the PCM will be in open loop whenever the TP values specified in the Fuel Open Loop TP table are exceeded. To avoid detonation, it is important that the actual lambda is not leaner than the commanded lambda, so I corrected the MTF using the acceleration data. I found that I needed to increase the MTF flow (lbs) by about 5% from 300 to 550 MAF AD counts and decrease it by about 5% at 850 MAF AD counts and above.
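For anyone wanting to see the arithmetic behind this kind of MTF correction, here is a minimal sketch. It assumes the log was taken in open loop (no fuel trims active) and that the whole lambda error is due to the MAF transfer function, which is a simplification. The function names and the table values in the usage note are hypothetical, not my actual MTF.

```python
from typing import Dict, Tuple


def corrected_flow(flow: float, actual_lambda: float, commanded_lambda: float) -> float:
    """Scale one MTF flow value by the ratio of actual to commanded lambda.

    If actual is leaner than commanded (ratio > 1), the engine is getting more
    air than the table reports, so the flow value goes up, and vice versa.
    """
    return flow * (actual_lambda / commanded_lambda)


def correct_mtf(mtf: Dict[int, float],
                observed: Dict[int, Tuple[float, float]]) -> Dict[int, float]:
    """Apply corrected_flow to every MAF AD count that has logged data.

    mtf: MAF AD count -> flow value (in whatever units the table uses)
    observed: MAF AD count -> (actual lambda, commanded lambda)
    AD counts with no logged data are left unchanged.
    """
    return {
        ad: corrected_flow(flow, *observed[ad]) if ad in observed else flow
        for ad, flow in mtf.items()
    }
```

With made-up numbers, a cell holding 10.0 where actual lambda was 1.05 against a commanded 1.00 would be raised by 5%, and a cell where actual was 0.95 against 1.00 would be lowered by 5%, which is the same direction and size as the adjustments described above.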

Click here to post comment on the discussion thread
